aLive

Fast Prototyping and Blending of Visuals for Live Performances

I enjoy VJing in my free time, mostly at parties and dance performances. To create a unique visual experience, you usually mix two or more layers of video (or images) into a new one using a technique called blending.

For example, consider the following images, a tree on top of a bleak twilight, and an aurora borealis at night:

Much like placing one semi-transparent slide on top of another, we can blend both images so that the result is 50% the first image and 50% the second. However, the result looks rather plain and unrealistic:
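In pixel terms, a 50/50 blend is just a per-channel weighted average. Here is a minimal C++ sketch, assuming RGB values normalized to [0, 1]. The `Pixel` type and the `mixPixels` name are illustrative and not taken from aLive:

```cpp
#include <array>

// An RGB pixel with channels normalized to [0, 1].
using Pixel = std::array<float, 3>;

// Linear "slide on slide" mix: t = 0 gives only `a`, t = 1 gives only `b`,
// and t = 0.5 is the 50/50 blend described above.
Pixel mixPixels(const Pixel& a, const Pixel& b, float t) {
    Pixel out{};
    for (int c = 0; c < 3; ++c) {
        out[c] = a[c] * (1.0f - t) + b[c] * t;
    }
    return out;
}
```

Every output channel lands between the two inputs. Because of this, a plain average can only ever wash the images toward each other, and it never increases contrast.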

Fortunately, there are many other, more effective, ways of combining (blending) these images, called Blend Modes. Consider the following six modes:

Each Blend Mode operates differently on the two images, emphasizing (or hiding) certain details of each input. I think the last one, Hardlight, works best in this case, as it preserves the tree silhouette but gets rid of the twilight:
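For reference, here are the widely used per-channel formulas for four common modes, following the W3C Compositing and Blending definitions. This is a sketch on values in [0, 1] and not aLive's shader code, and the function names are illustrative. Hard Light multiplies where the top layer is dark and screens where it is light. As a result, a near-black pixel in the top layer (top ≈ 0) stays near-black no matter what lies beneath it:

```cpp
// Per-channel blend formulas on values in [0, 1].
// `bottom` is the lower layer (backdrop), `top` is the layer placed over it.
float multiply(float bottom, float top) { return bottom * top; }

float screen(float bottom, float top) {
    return 1.0f - (1.0f - bottom) * (1.0f - top);
}

// Hard Light: multiply where the top layer is dark, screen where it is light.
float hardLight(float bottom, float top) {
    return top <= 0.5f ? multiply(bottom, 2.0f * top)
                       : screen(bottom, 2.0f * top - 1.0f);
}

// Overlay is Hard Light with the two layers swapped, so the bottom layer
// decides whether each channel is multiplied or screened.
float overlay(float bottom, float top) { return hardLight(top, bottom); }
```

Unlike a 50/50 mix, these modes are not symmetric. For example, `hardLight(0.25f, 0.75f)` differs from `hardLight(0.75f, 0.25f)`, so the choice of which layer goes on top matters.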

Mastering Blend Modes can be tricky, even for Photoshop experts, as choosing the most appropriate Blend Mode always depends on the visual attributes (color, contrast, opacity) of each image. With videos, it is even harder, as colors and lighting conditions can vary between frames.

The Problem

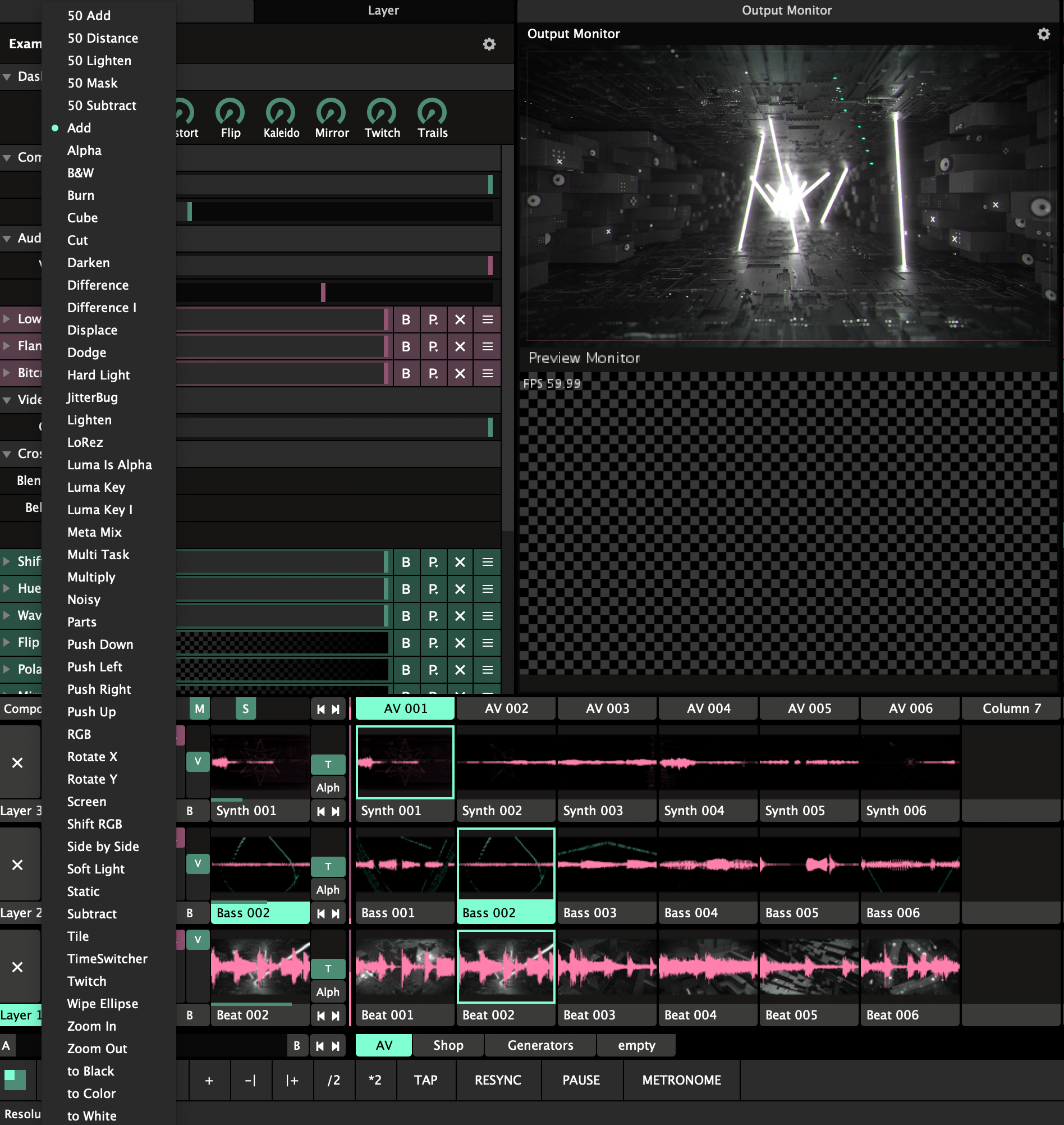

Usually, you use software such as Arena, VDMX, MadMapper, or TouchDesigner for VJing. Each of these tools offers a robust set of features for blending videos and images as layers that you can stack on top of each other. For instance, Arena offers 50 different Blend Modes for each layer:

In addition, there are many other parameters that can be modified for each source video/image: Playback Speed, Opacity, Brightness, Cue Points, Blend Mix, etc. This level of customization is great, but it gets in the way of creating a new VJ set from scratch when you have a large library of sources (videos and images).

Let's do some napkin math: with 200 different sources, there are 19,900 unique pairs of videos. Multiplying the number of pairs by 50 blend modes gives 995,000 blending possibilities to choose from. Exploring them is an onerous task with current VJ tools, where each source needs to be dragged onto a layer and each blend mode selected from a combo box.
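The napkin math above is a binomial coefficient (200 choose 2) multiplied by the number of modes. A quick check in C++ follows. The function names are illustrative:

```cpp
#include <cstdint>

// Number of unordered pairs from n sources: n choose 2 = n(n - 1) / 2.
std::uint64_t uniquePairs(std::uint64_t n) { return n * (n - 1) / 2; }

// Every pair can be combined with every blend mode.
std::uint64_t blendPossibilities(std::uint64_t sources, std::uint64_t modes) {
    return uniquePairs(sources) * modes;
}
```

`uniquePairs(200)` returns 19,900, and `blendPossibilities(200, 50)` returns 995,000. This count treats A-over-B and B-over-A as the same pair. For non-symmetric modes such as Hard Light, the layer order also matters, which would double the count to n(n - 1) ordered pairs.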

So I wondered: what could I do to streamline building a VJ set and identifying the best blending combinations in a large library of videos and images?

Enter aLive

For a pair of live performance projects I was working on, I needed a more agile way to prototype the kind of visual experience I had in mind and to assess which clips/images were most suitable for blending.

This was a UX/UI design problem. Current VJing tools, while excellent for performing a live VJ set, are sub-optimal for prototyping a visual experience from a large library of clips, and for choosing the best tracks, blend modes, and other settings.

UI Design

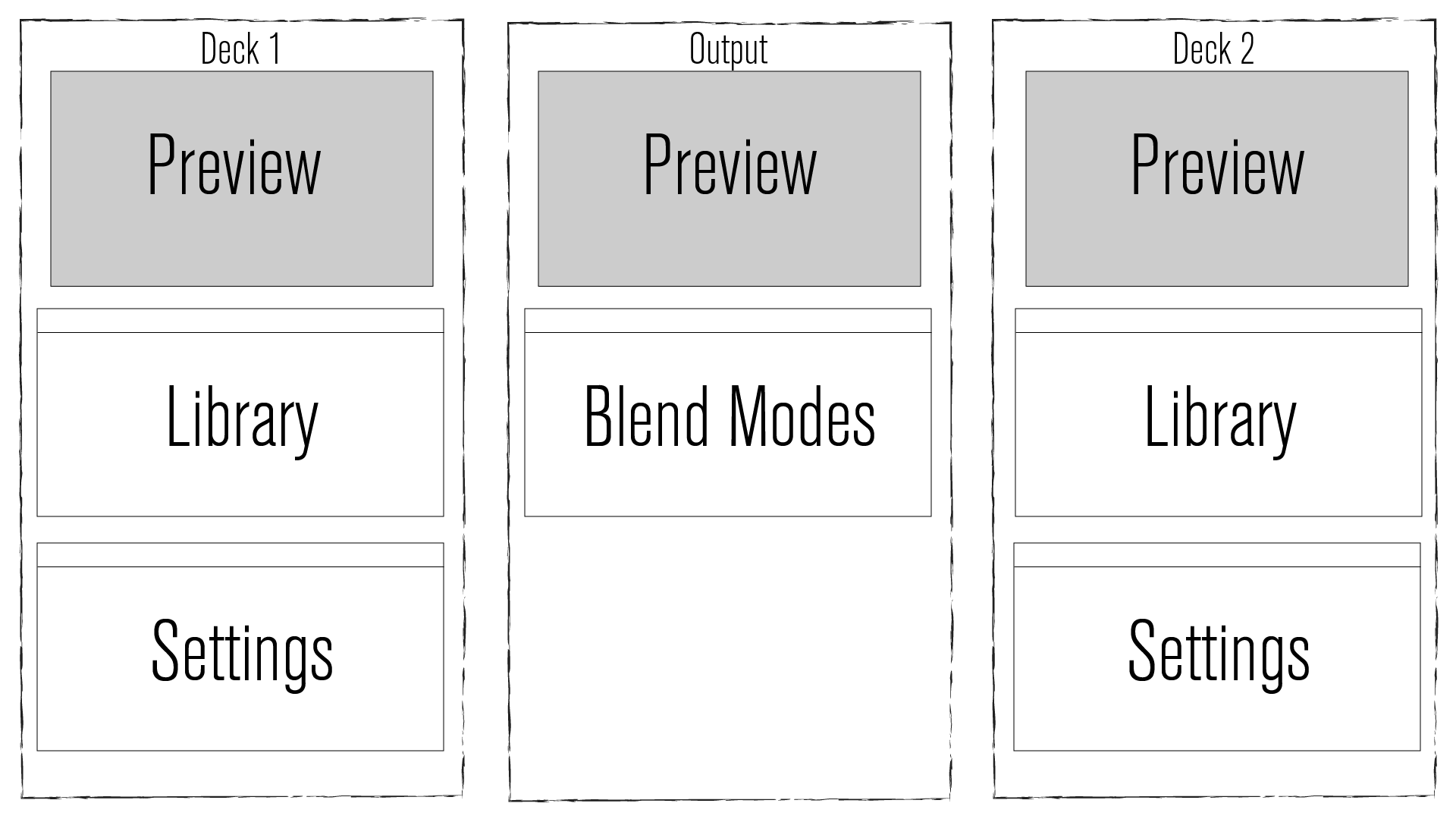

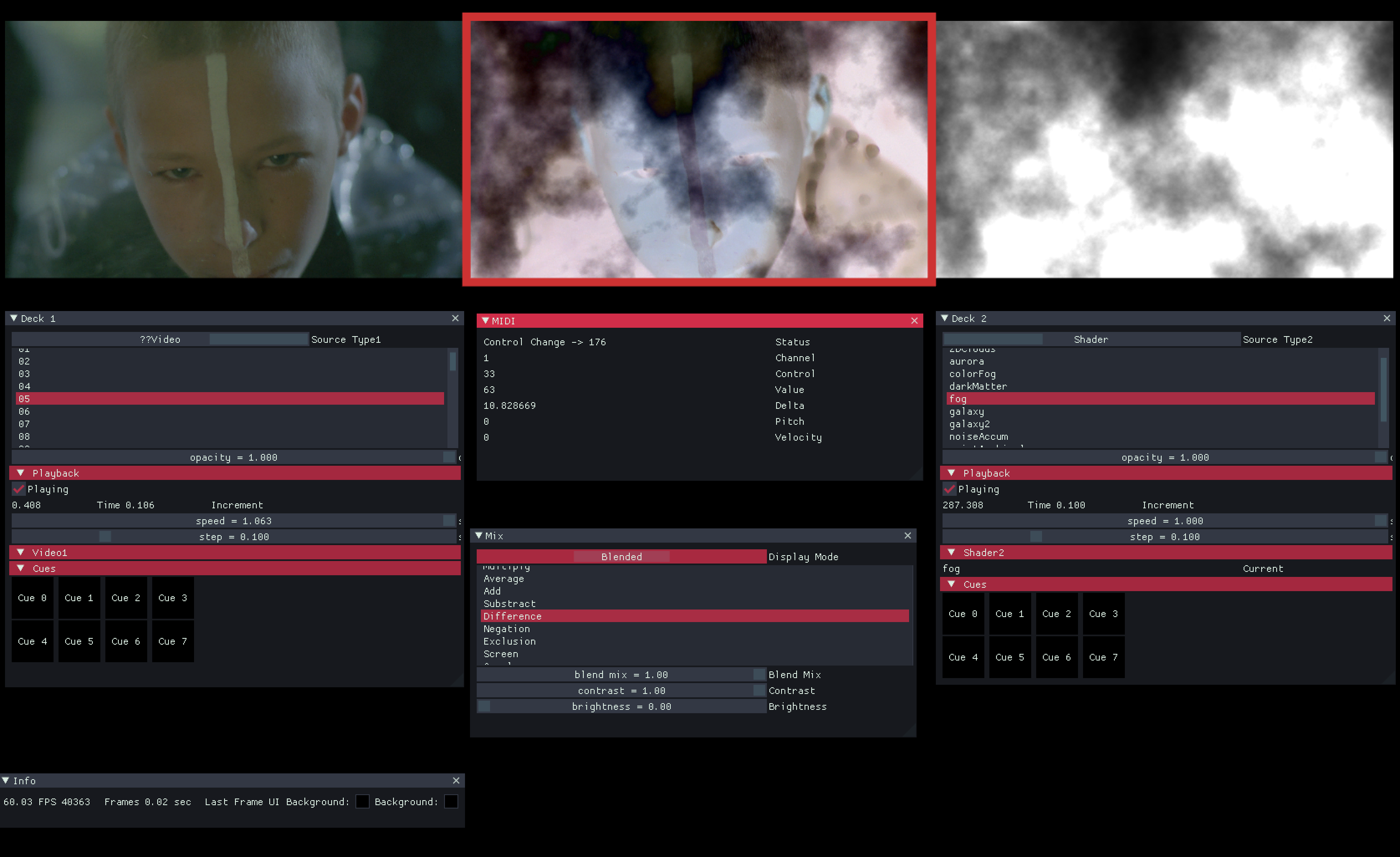

The User Interface is inspired by DJ software, where you have two decks that you can cross-fade, with a live preview of the blended result (Output) in the middle:

Each Deck has a preview of the track, a library navigator, and customizable settings for the clip. The Output panel shows the available Blend Modes. The UI was developed with Dear ImGui, a C++ toolkit that implements the Immediate Mode GUI paradigm and offers a myriad of customizable UI widgets.

UX Design

Instead of a keyboard/touchpad and classical UI widgets, a tangible User Interface can be valuable for this kind of specialized task. For instance, a DJ controller has several buttons, jog platters, knobs, and faders that can be easily mapped to software actions using the MIDI protocol. I had a cheap controller on hand, a Pioneer DDJ-200, which I used to interact with the tool:
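Controllers like this send standard MIDI channel messages. The high nibble of the status byte is the message type (0x8n Note Off, 0x9n Note On, 0xBn Control Change), and the low nibble is the channel. The sketch below decodes those messages. It assumes raw 3-byte messages have already been read from some MIDI input library. Which note or controller number corresponds to which DDJ-200 button or fader is device-specific and is not shown here. The types and functions are illustrative, not aLive's code:

```cpp
#include <cstdint>
#include <optional>

// Decoded subset of MIDI channel messages a DJ controller typically sends.
enum class MidiKind { NoteOn, NoteOff, ControlChange };

struct MidiEvent {
    MidiKind kind;
    int channel;  // 0-15
    int number;   // note number or controller number, 0-127
    int value;    // velocity or controller value, 0-127
};

// Parse a 3-byte MIDI channel message. Returns std::nullopt for message
// types this sketch does not handle (pitch bend, aftertouch, SysEx, ...).
std::optional<MidiEvent> parseMidi(std::uint8_t status, std::uint8_t data1,
                                   std::uint8_t data2) {
    const int type = status & 0xF0;
    const int channel = status & 0x0F;
    const int number = data1 & 0x7F;
    const int value = data2 & 0x7F;
    switch (type) {
        case 0x80:
            return MidiEvent{MidiKind::NoteOff, channel, number, value};
        case 0x90:
            // By MIDI convention, Note On with velocity 0 means Note Off.
            return MidiEvent{value == 0 ? MidiKind::NoteOff : MidiKind::NoteOn,
                             channel, number, value};
        case 0xB0:
            return MidiEvent{MidiKind::ControlChange, channel, number, value};
        default:
            return std::nullopt;
    }
}

// Map a 7-bit controller value (0-127) to a normalized fader position in [0, 1].
float ccToUnit(int value) { return static_cast<float>(value) / 127.0f; }
```

A mapping layer would then route, for example, a Control Change from a given fader to an opacity value through `ccToUnit`, and a Note On from a pad to a cue trigger.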

Image Processing

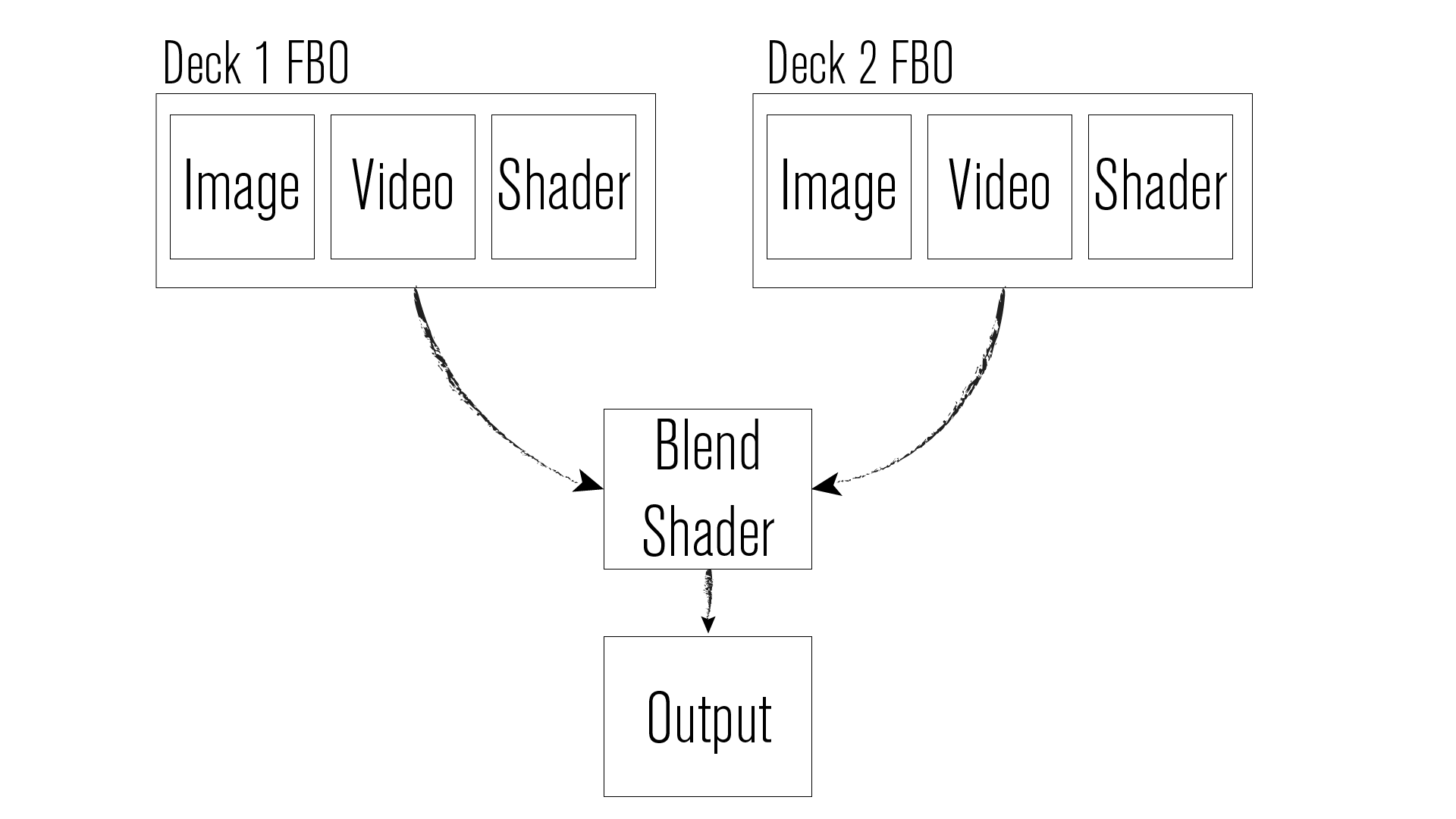

aLive supports three kinds of sources: Images, Videos, and Shaders, which are small programs for computer-generated visuals and special effects (see ShaderToy).
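ShaderToy-style shaders are animated by a time uniform (ShaderToy names it `iTime`), so speed control for a shader source comes down to how that time value advances. The sketch below shows one common approach, under the assumption that the host program uploads `time` to the shader each frame. `ShaderClock` is a hypothetical name, not aLive's code:

```cpp
// Hypothetical playback clock for a ShaderToy-style shader.
// Time is accumulated per frame (time += dt * speed) rather than computed
// as wallClock * speed, so changing the speed does not make the animation jump.
struct ShaderClock {
    double time = 0.0;   // value to upload as the shader's time uniform
    double speed = 1.0;  // 1 = normal, 0.5 = half speed, 0 = paused, negative = reverse

    // Call once per rendered frame with the elapsed wall-clock seconds.
    void advance(double dtSeconds) { time += dtSeconds * speed; }
};
```

Computing `wallClock * speed` instead would make the shader jump backwards: dropping from 1.0 to 0.5 at the 10-second mark would snap the time from 10 s to 5 s. Accumulating keeps the motion continuous while a knob changes the speed.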

Each Deck renders every frame of its source into a Frame Buffer Object (FBO) backed by a texture. Both textures are sent to the Blend Shader, a GPU program that blends them using 26 different blending modes.
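On the GPU this per-pixel work runs in a fragment shader. The C++ loop below mirrors that pass on the CPU so the logic is easy to follow. It is a sketch under several assumptions:

- Frames are flat arrays of normalized channels with equal sizes.
- Only four modes are shown, using the standard Add (clamped), Difference, Darken, and Lighten formulas.
- The names `BlendMode`, `blendChannel`, `blendFrames`, and the `opacity` mix are hypothetical and not taken from aLive.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// A handful of modes for illustration; the real Blend Shader has 26.
enum class BlendMode { Add, Difference, Darken, Lighten };

float blendChannel(BlendMode mode, float bottom, float top) {
    switch (mode) {
        case BlendMode::Add:        return std::min(bottom + top, 1.0f);  // clamped add
        case BlendMode::Difference: return std::fabs(bottom - top);
        case BlendMode::Darken:     return std::min(bottom, top);
        case BlendMode::Lighten:    return std::max(bottom, top);
    }
    return bottom;  // unreachable for valid enum values
}

// CPU reference of what a blend fragment shader does for every pixel:
// read both decks' textures, apply the selected mode, then mix the blended
// color back over the bottom layer by `opacity` in [0, 1].
std::vector<float> blendFrames(const std::vector<float>& bottom,
                               const std::vector<float>& top,
                               BlendMode mode, float opacity) {
    if (bottom.size() != top.size()) {
        throw std::invalid_argument("frames must have the same size");
    }
    std::vector<float> out(bottom.size());
    for (std::size_t i = 0; i < bottom.size(); ++i) {
        const float blended = blendChannel(mode, bottom[i], top[i]);
        out[i] = bottom[i] + (blended - bottom[i]) * opacity;
    }
    return out;
}
```

In a shader, the mode would typically arrive as an integer uniform and the loop body would run once per fragment, in parallel.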

Features

- 26 different Blending Modes using a Fragment Shader

- 2 Decks for mixing Shaders, Images, and Videos

- 8 Programmable Cues for each deck

- Fine-grained control of Speed for Videos and Shaders

- Different Output resolutions (720p, 1080p, 4K, Custom)

- Controller Mapping for Pioneer DDJ-200, as well as on-screen UI controls

- Frame-by-frame search using the jog platters
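The cue and jog features above can be modeled as a small piece of per-deck state. The sketch below makes two assumptions: a video is addressed by frame index, and the jog platter's MIDI messages have already been decoded into signed tick counts. The `Deck` class and its methods are hypothetical, not aLive's actual types:

```cpp
#include <algorithm>
#include <array>
#include <optional>

// Hypothetical per-deck playback state illustrating programmable cues
// and frame-by-frame jog search.
class Deck {
public:
    static constexpr int kNumCues = 8;

    explicit Deck(int frameCount) : frameCount_(std::max(frameCount, 1)) {}

    int frame() const { return frame_; }

    // Jump to an absolute frame, clamped to the clip's range.
    void seek(int frame) { frame_ = std::clamp(frame, 0, frameCount_ - 1); }

    // Each jog tick moves exactly one frame forward (positive) or back (negative).
    void jog(int ticks) { seek(frame_ + ticks); }

    // Store the current frame in a cue slot (0-7); throws std::out_of_range otherwise.
    void setCue(int slot) { cues_.at(slot) = frame_; }

    // Jump to a stored cue; returns false if the slot is empty.
    bool triggerCue(int slot) {
        const std::optional<int>& cue = cues_.at(slot);
        if (!cue) return false;
        seek(*cue);
        return true;
    }

private:
    int frameCount_;
    int frame_ = 0;
    std::array<std::optional<int>, kNumCues> cues_{};
};
```

Mapping one jog tick to one frame is what makes precise, frame-accurate searching possible. A real implementation might also scale ticks by a sensitivity setting.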